Comparative Analysis of SLAM Algorithms

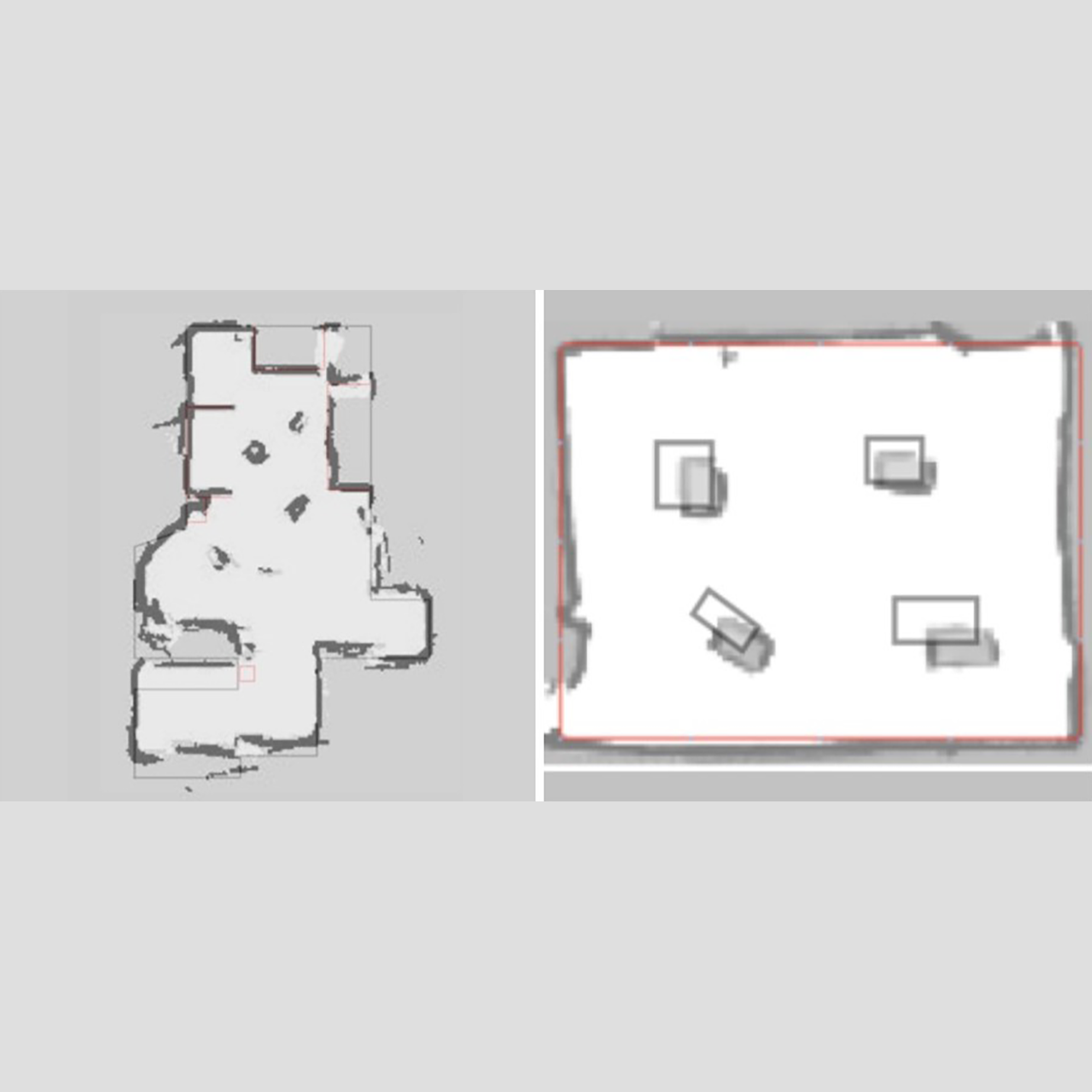

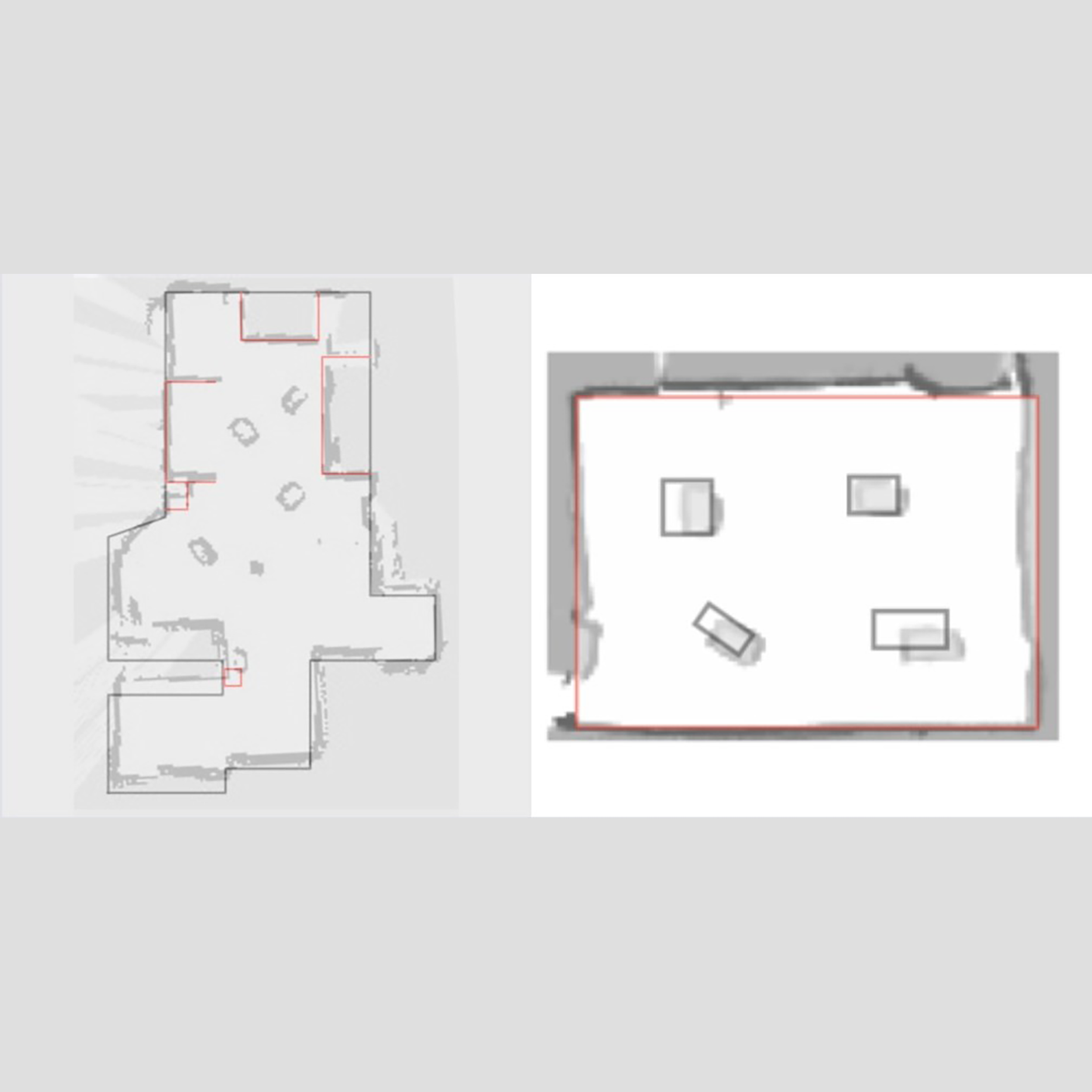

This project compares the performance and accuracy of two widely used Simultaneous Localization and Mapping (SLAM) algorithms, GMapping and Cartographer, for indoor robotic navigation. SLAM lets an autonomous system build a map of an unfamiliar environment while localizing itself within that map. GMapping uses a 2D grid-based particle filter approach, whereas Cartographer uses a graph-based method capable of both 2D and 3D mapping.

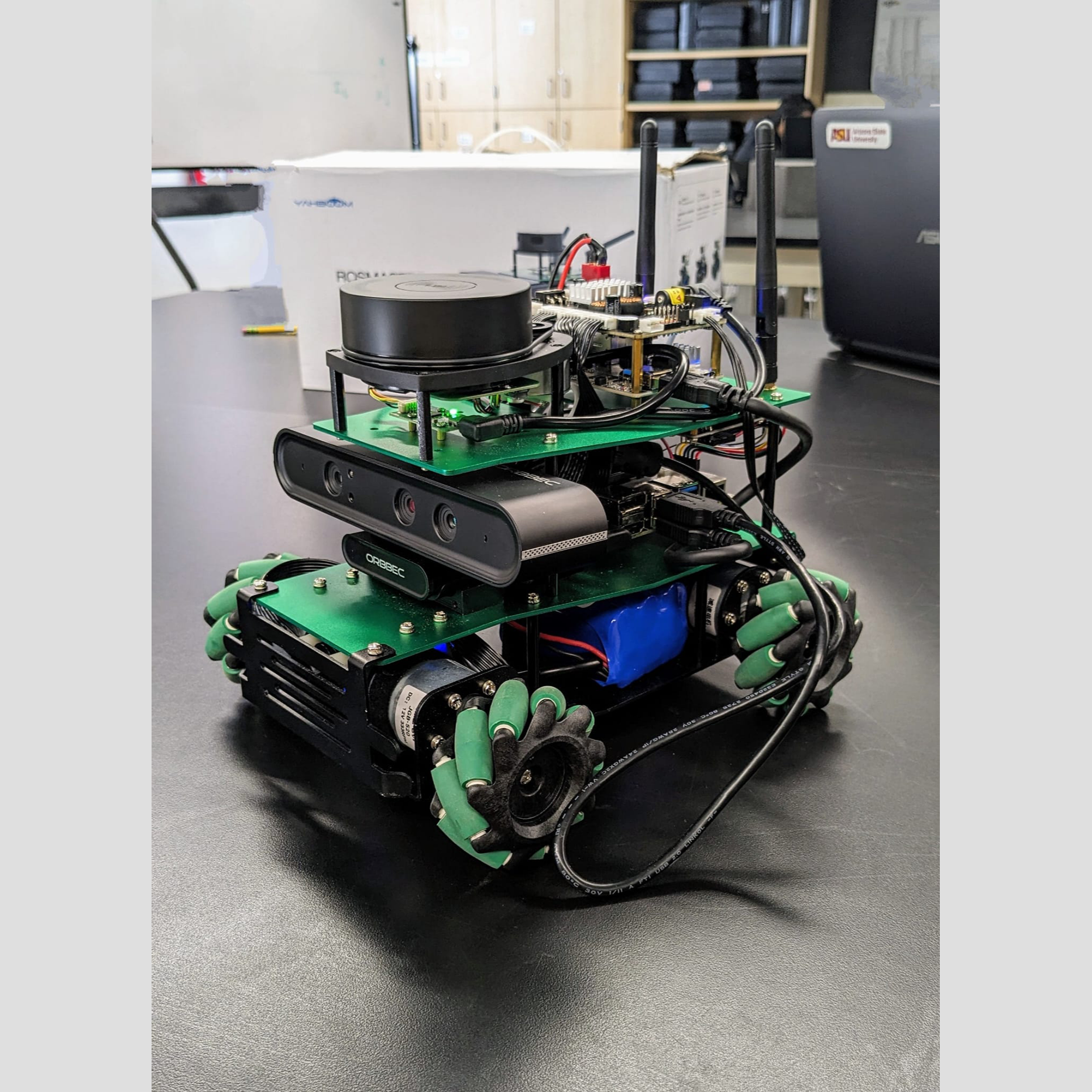

The experiments used a Yahboom ROS Master X3 robot equipped with an RPLIDAR sensor to collect real-time data in controlled indoor environments of varying complexity. Mapping accuracy was evaluated quantitatively with Mean Squared Error (MSE) and Mean Absolute Error (MAE), computed by comparing each generated map against a ground truth reference.
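As a rough illustration of the two metrics, the sketch below computes MSE and MAE cell by cell between a generated grid and a ground truth grid. It assumes both maps have already been aligned and resampled to the same size and resolution, and that each cell holds a numeric occupancy value (here 0 for free, 1 for occupied). The function name `map_errors` and the sample grids are hypothetical and not taken from the project's own tooling.

```python
def map_errors(generated, ground_truth):
    """Return (MSE, MAE) between two equally sized 2D grids of numbers.

    Assumption: the grids are already aligned cell for cell.
    """
    if len(generated) != len(ground_truth):
        raise ValueError("grids must have the same number of rows")

    diffs = []
    for gen_row, ref_row in zip(generated, ground_truth):
        if len(gen_row) != len(ref_row):
            raise ValueError("grid rows must have the same length")
        diffs.extend(g - r for g, r in zip(gen_row, ref_row))

    if not diffs:
        raise ValueError("grids must not be empty")

    n = len(diffs)
    mse = sum(d * d for d in diffs) / n      # mean of squared cell errors
    mae = sum(abs(d) for d in diffs) / n     # mean of absolute cell errors
    return mse, mae


# Hypothetical 3x3 occupancy grids (0 = free, 1 = occupied).
ground_truth = [[0, 0, 1],
                [0, 1, 1],
                [0, 0, 1]]
generated = [[0, 0, 1],
             [0, 0, 1],
             [0, 1, 1]]

mse, mae = map_errors(generated, ground_truth)
print(f"MSE={mse:.4f}  MAE={mae:.4f}")
```

For binary grids the two metrics coincide, since each cell error is 0 or 1 in magnitude. They diverge once cells carry occupancy probabilities, where MSE penalizes large per-cell errors more heavily than MAE.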

The key findings from this comparative analysis were:

- GMapping demonstrated superior performance in structured, simpler environments with fewer obstacles.

- Cartographer achieved higher accuracy in complex layouts containing multiple obstacles.

A significant challenge identified during this study was the presence of blind spots caused by the LIDAR's fixed mounting height of 7 inches (about 18 cm). Because the sensor scans at that fixed height, obstacles lower than it could go undetected. In addition, the absence of standardized evaluation methodologies for SLAM systems made consistent quantitative comparison difficult.
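The blind spot can be pictured with a simplified geometric check. The sketch below assumes an idealized, perfectly horizontal scan plane at the mounting height and ignores beam divergence, robot tilt, and sensor range limits. The function name and the example obstacle heights are hypothetical.

```python
SCAN_HEIGHT_IN = 7.0  # LIDAR mounting height reported in the study, in inches


def crosses_scan_plane(bottom_in, top_in, scan_height_in=SCAN_HEIGHT_IN):
    """Return True if an obstacle spanning [bottom_in, top_in] (heights in
    inches above the floor) intersects the idealized horizontal scan plane."""
    if bottom_in > top_in:
        raise ValueError("bottom_in must not exceed top_in")
    return bottom_in <= scan_height_in <= top_in


# Hypothetical obstacles: (name, bottom height, top height) in inches.
obstacles = [
    ("low box", 0.0, 4.0),      # entirely below the scan plane
    ("chair leg", 0.0, 18.0),   # crosses the scan plane
    ("shelf edge", 10.0, 12.0), # entirely above the scan plane
]

for name, bottom, top in obstacles:
    status = "detectable" if crosses_scan_plane(bottom, top) else "blind spot"
    print(f"{name}: {status}")
```

Under this simplified model, anything that lies entirely below or entirely above the scan plane never produces a return, which is why an extra sensor with vertical coverage helps.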

To address the blind-spot limitation, the project proposes integrating additional depth sensors or cameras to extend obstacle detection coverage and improve overall mapping reliability. The results offer practical guidance on algorithm selection, sensor configuration, and evaluation strategy for more robust and adaptive autonomous systems.

By analyzing both SLAM algorithms under real-world constraints, the project shows the importance of matching an algorithm's capabilities to the challenges of the environment it will operate in.