EdgeVision: User-Defined Object Counting Using Raspberry Pi

EdgeVision is a real-time object detection and counting system designed for resource-constrained embedded platforms. It runs on a Raspberry Pi 4 paired with the high-resolution Raspberry Pi Camera Module V2 and uses a Faster R-CNN detector with a ResNet-50 backbone, so object detection and tracking both run directly on the device at the edge. EdgeVision targets practical applications such as public safety monitoring, traffic analysis, and retail analytics, where real-time insights are critical.
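Before detections reach the tracker, the raw detector output is usually thresholded. The sketch below is an illustrative post-processing step, not EdgeVision's actual code: it assumes a torchvision-style output of parallel `boxes`, `labels`, and `scores` lists, with boxes as `(x1, y1, x2, y2)` pixel coordinates. The function name `filter_detections`, the `target_labels` set, and the default threshold of 0.5 are hypothetical choices made for this example.

```python
from typing import List, Sequence, Set, Tuple

# A bounding box as (x1, y1, x2, y2) in pixel coordinates (assumed format).
Box = Tuple[float, float, float, float]


def filter_detections(
    boxes: Sequence[Box],
    labels: Sequence[int],
    scores: Sequence[float],
    target_labels: Set[int],
    score_threshold: float = 0.5,
) -> List[Box]:
    """Keep only boxes whose class is of interest and whose confidence is high enough.

    Hypothetical helper: the label ids and threshold are illustrative, not
    taken from EdgeVision's configuration.
    """
    kept: List[Box] = []
    for box, label, score in zip(boxes, labels, scores):
        if label in target_labels and score >= score_threshold:
            # Normalise coordinates to floats so downstream centroid math is uniform.
            x1, y1, x2, y2 = box
            kept.append((float(x1), float(y1), float(x2), float(y2)))
    return kept
```

Keeping this step separate from the tracker means the confidence threshold and the set of counted classes can be tuned without touching the tracking or counting logic.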

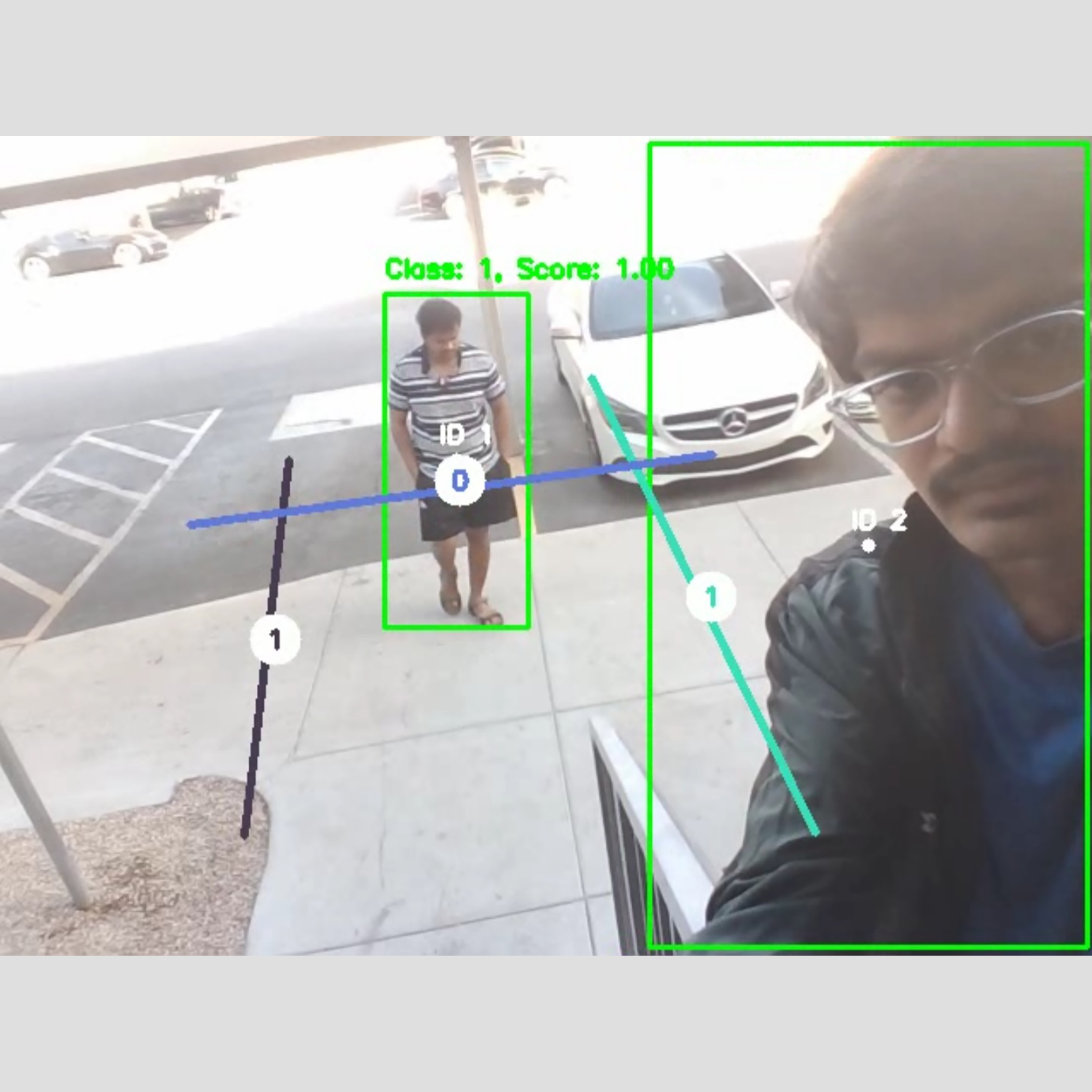

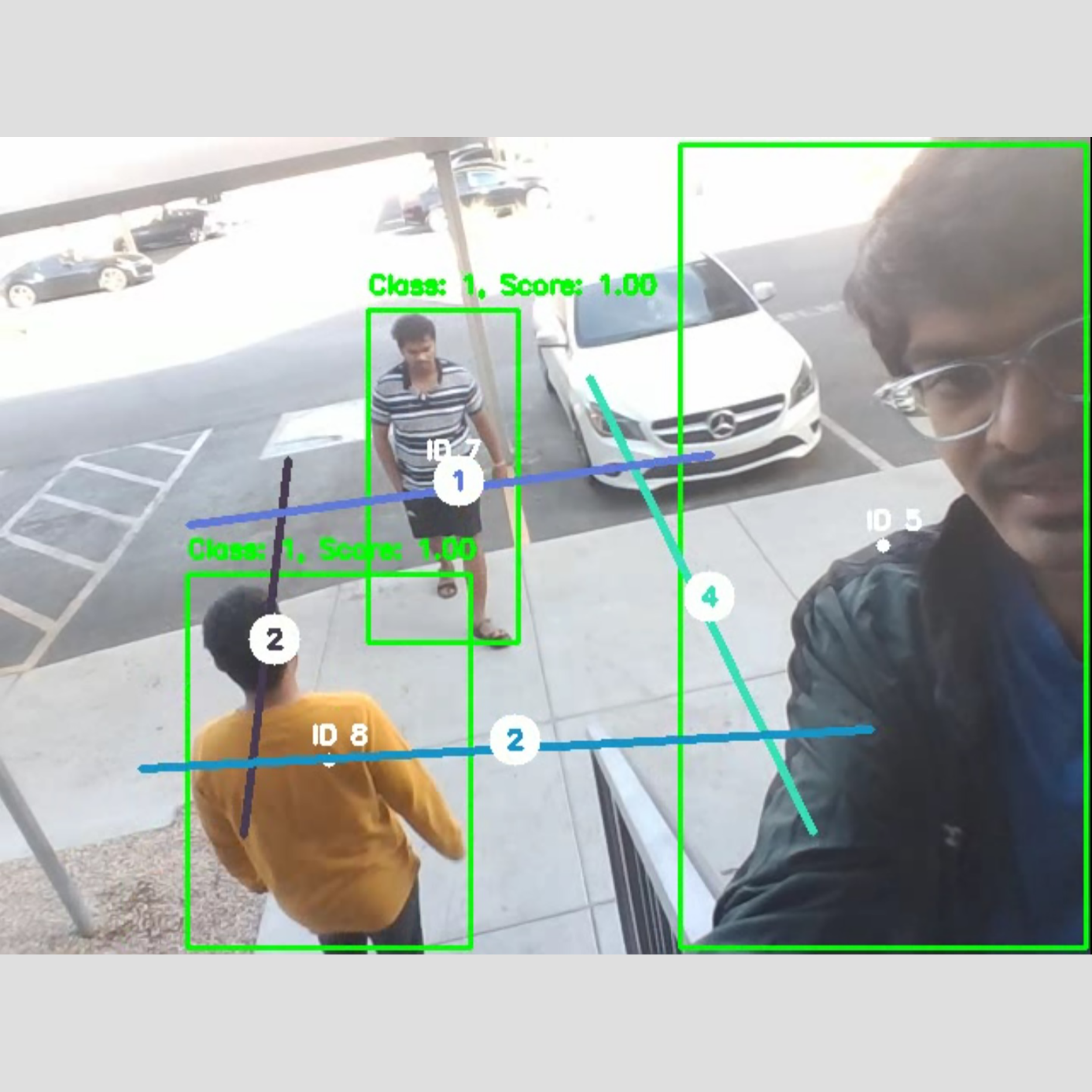

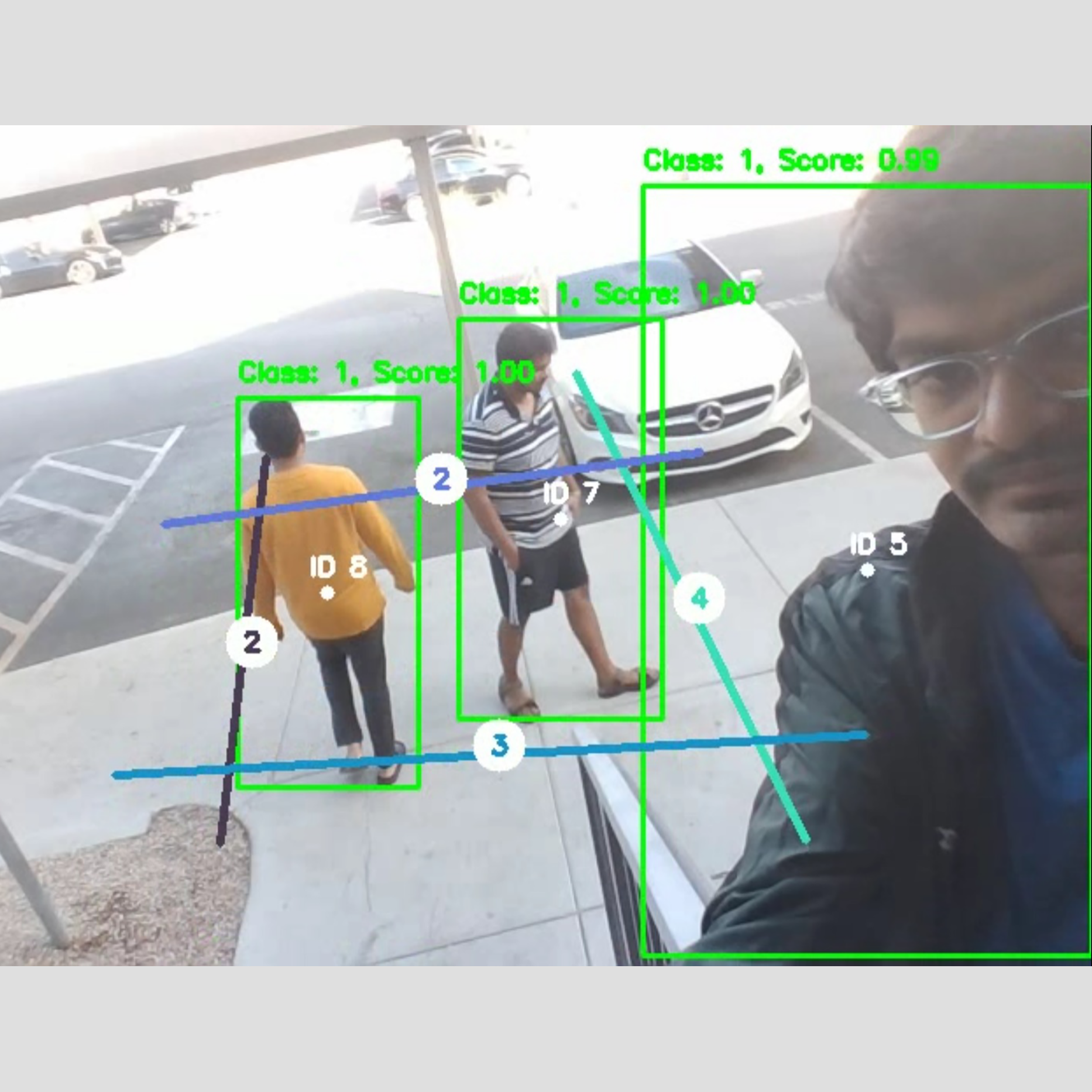

The architecture incorporates a CentroidTracker module, which keeps object identities consistent across video frames using a centroid-based nearest-neighbor tracking algorithm. This helps the system keep track of objects even in challenging scenarios such as temporary occlusions or overlapping objects.
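The following is a minimal sketch of a centroid-based nearest-neighbor tracker, written to illustrate the idea rather than to reproduce EdgeVision's CentroidTracker. It assumes each frame supplies a list of `(x1, y1, x2, y2)` boxes. The matching strategy (greedy assignment over all object-to-detection distances, shortest first) and the parameters `max_disappeared` and `max_distance` are assumptions; the `max_disappeared` grace period is what lets an object keep its ID through a brief occlusion.

```python
import math
from collections import OrderedDict
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]
Point = Tuple[float, float]


class CentroidTracker:
    """Illustrative centroid tracker (sketch, not the project's implementation)."""

    def __init__(self, max_disappeared: int = 30, max_distance: float = 80.0) -> None:
        self.next_id = 0
        self.objects: "OrderedDict[int, Point]" = OrderedDict()    # id -> last centroid
        self.disappeared: "OrderedDict[int, int]" = OrderedDict()  # id -> missed frames
        self.max_disappeared = max_disappeared  # frames an ID survives without a match
        self.max_distance = max_distance        # largest centroid jump accepted as a match

    def _register(self, centroid: Point) -> None:
        self.objects[self.next_id] = centroid
        self.disappeared[self.next_id] = 0
        self.next_id += 1

    def _deregister(self, obj_id: int) -> None:
        del self.objects[obj_id]
        del self.disappeared[obj_id]

    def update(self, boxes: Sequence[Box]) -> Dict[int, Point]:
        centroids: List[Point] = [((x1 + x2) / 2.0, (y1 + y2) / 2.0) for x1, y1, x2, y2 in boxes]

        # No existing objects: every detection starts a new track.
        if not self.objects:
            for c in centroids:
                self._register(c)
            return dict(self.objects)

        # Greedy nearest-neighbour matching: consider all (distance, id, detection)
        # pairs from closest to farthest and accept each pair if both sides are free.
        pairs = sorted(
            (math.dist(self.objects[oid], c), oid, i)
            for oid in self.objects
            for i, c in enumerate(centroids)
        )
        used_ids, used_idx = set(), set()
        for dist, oid, i in pairs:
            if dist > self.max_distance:
                break  # remaining pairs are even farther apart
            if oid in used_ids or i in used_idx:
                continue
            self.objects[oid] = centroids[i]
            self.disappeared[oid] = 0
            used_ids.add(oid)
            used_idx.add(i)

        # Unmatched existing objects: count a missed frame, drop them after the grace period.
        for oid in list(self.objects):
            if oid not in used_ids:
                self.disappeared[oid] += 1
                if self.disappeared[oid] > self.max_disappeared:
                    self._deregister(oid)

        # Unmatched detections become new objects.
        for i, c in enumerate(centroids):
            if i not in used_idx:
                self._register(c)

        return dict(self.objects)
```

Per frame, the filtered detector boxes would be passed to `update()`, which returns the current `id -> centroid` mapping used by the counting logic.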

One of EdgeVision’s core innovations is its interactive, user-friendly interface. Users draw virtual line segments onto the live video feed with the mouse. Each line segment acts as a counter: objects are counted automatically as their tracked positions cross it. This lets users configure counting zones flexibly without modifying the underlying code.
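One way to turn a drawn segment into a counter is to test whether each tracked object's movement between consecutive frames intersects that segment. The sketch below is a hypothetical illustration: it assumes the segment endpoints `a` and `b` come from mouse events (for example via OpenCV's `cv2.setMouseCallback`, not shown here) and that the tracker reports an `id -> centroid` mapping each frame. The class name `LineCounter` and the rule of counting each object ID at most once are assumptions made for this example.

```python
from typing import Dict, Set, Tuple

Point = Tuple[float, float]


def _orient(a: Point, b: Point, p: Point) -> float:
    """2D cross product: >0 if p is left of a->b, <0 if right, 0 if collinear."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def segments_cross(p1: Point, p2: Point, a: Point, b: Point) -> bool:
    """True if movement p1->p2 strictly crosses the counting segment a-b."""
    d1, d2 = _orient(a, b, p1), _orient(a, b, p2)
    d3, d4 = _orient(p1, p2, a), _orient(p1, p2, b)
    # Endpoints of each segment must lie on opposite sides of the other segment.
    return d1 * d2 < 0 and d3 * d4 < 0


class LineCounter:
    """Hypothetical counter for one user-drawn line segment."""

    def __init__(self, a: Point, b: Point) -> None:
        self.a, self.b = a, b
        self.counted: Set[int] = set()          # IDs already counted (count once each)
        self.last: Dict[int, Point] = {}        # previous centroid per tracked ID

    def update(self, objects: Dict[int, Point]) -> int:
        for oid, centroid in objects.items():
            prev = self.last.get(oid)
            if prev is not None and oid not in self.counted:
                if segments_cross(prev, centroid, self.a, self.b):
                    self.counted.add(oid)
        # Remember only currently tracked IDs so the history does not grow unbounded.
        self.last = dict(objects)
        return len(self.counted)
```

Testing segment intersection, rather than just which side of an infinite line the centroid is on, means objects passing beyond the ends of a drawn segment are not counted.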

Through efficient model optimization and real-time video streaming, EdgeVision demonstrates how deep learning models can be effectively deployed on low-power embedded systems, bridging the gap between high-performance object detection and the practical constraints of edge computing environments.